Akhmedov Sobit

Aug 18, 2023

Scaling the Wolt Merchant App to Serve 30x More Users

Wolt is best known for getting food, fresh groceries and other items delivered to your door, quickly and reliably. To make these deliveries happen, we're building a commerce platform that connects three parties seamlessly: merchants selling the meals and items, courier partners delivering the goods and customers who place the orders.

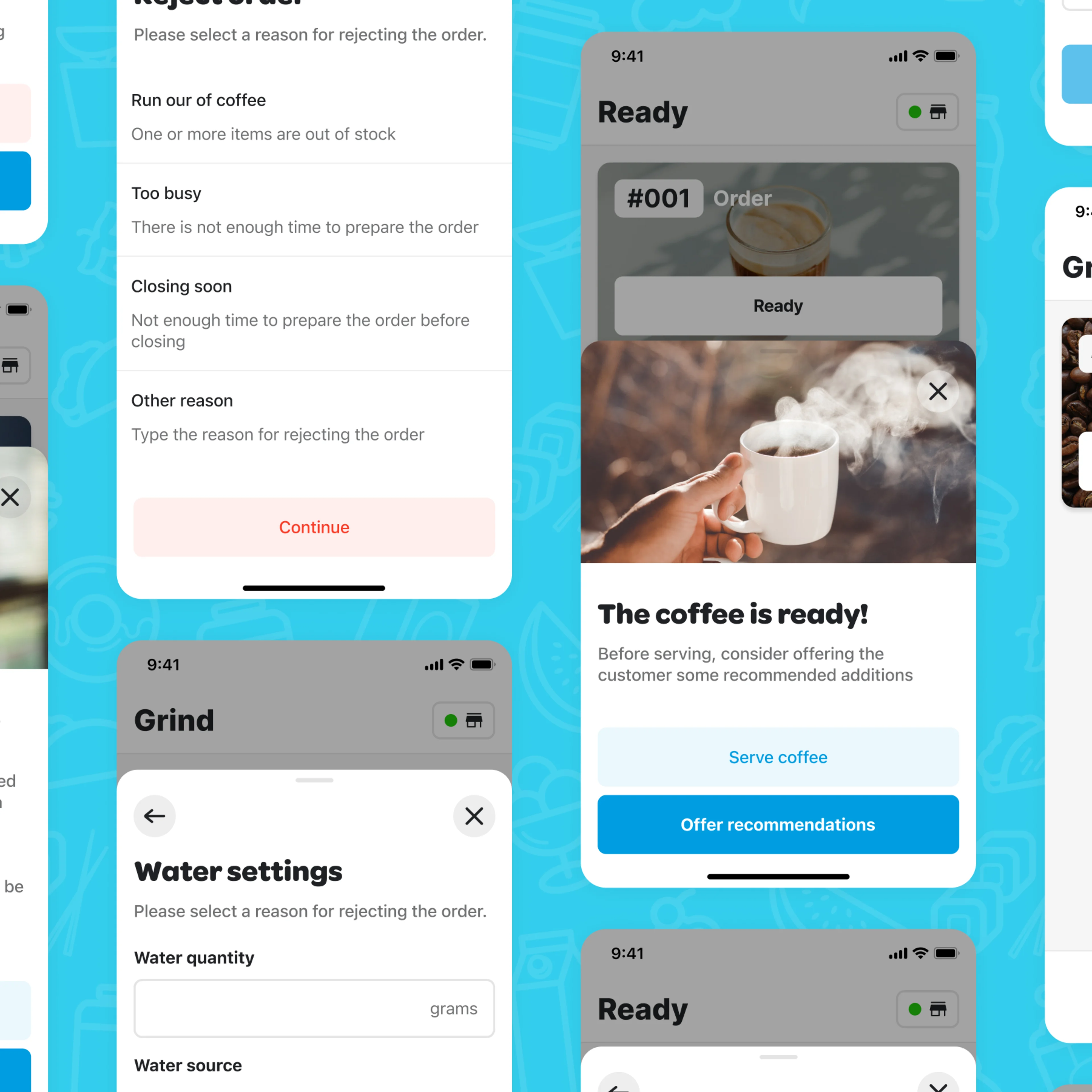

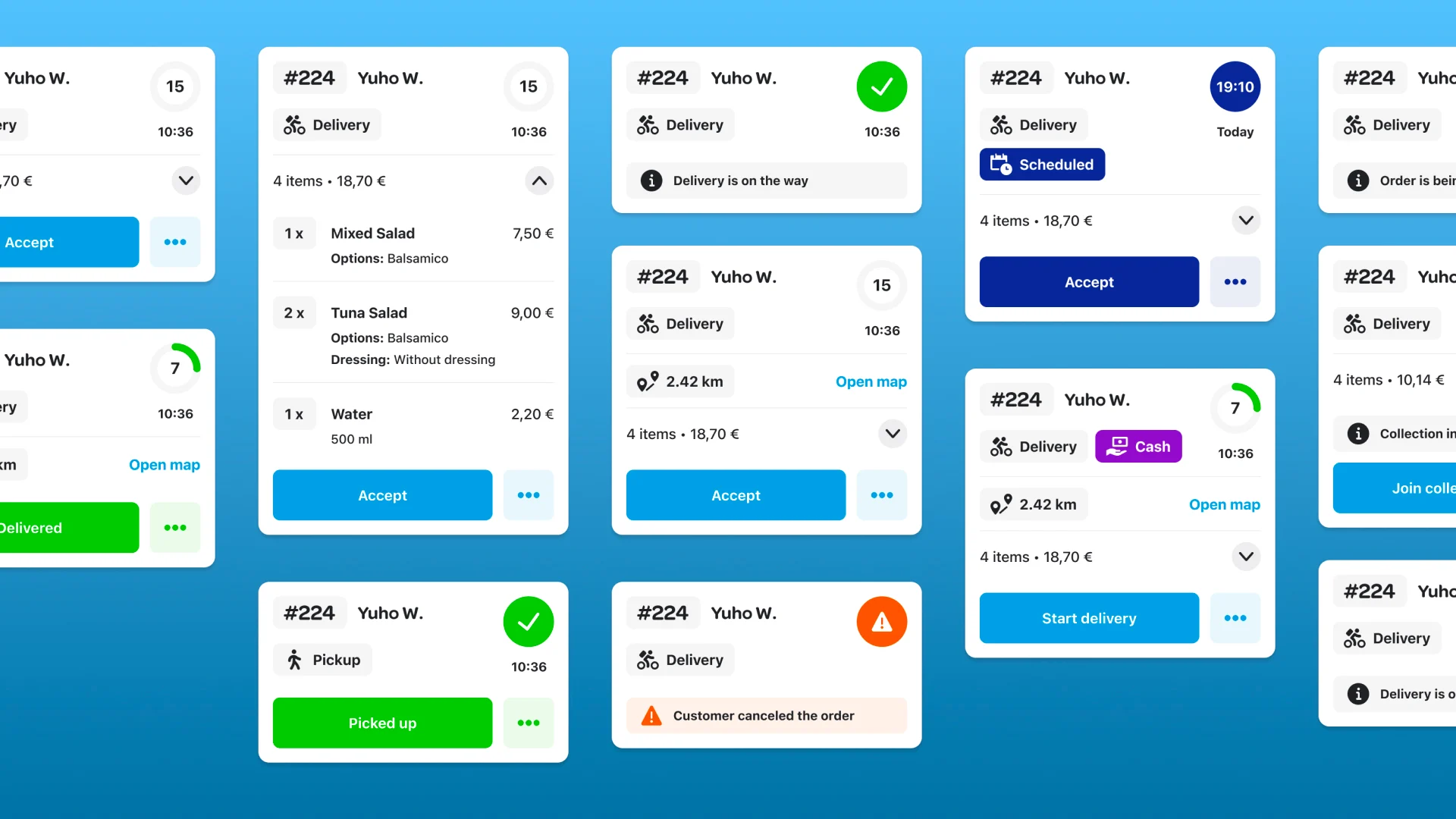

Historically, when a new merchant joined Wolt, we sent them an iPad with a pre-installed iOS merchant app, which has everything they need to run their business on Wolt. Over time we learned that iPads created onboarding barriers for some merchants, so we decided to offer them a multi-platform Flutter app instead, available on the App Store and Play Store. They would then be free to use any device they want, whether it's a phone or a tablet, running on iOS or Android.

Both the original iOS and the new Flutter merchant apps had their own backends and separate infrastructure, and over time our heavy investments in the Flutter app resulted in it becoming a better alternative for merchants, offering a richer feature set and flexibility. Strategically we decided to focus less on the old app and replace it with the Flutter app on the existing iPads.

However, we faced a challenge: the new app served only 10% of all merchants, and its backend systems had never been battle-tested with large amounts of data and users. So we began preparing to scale the new app's backend.

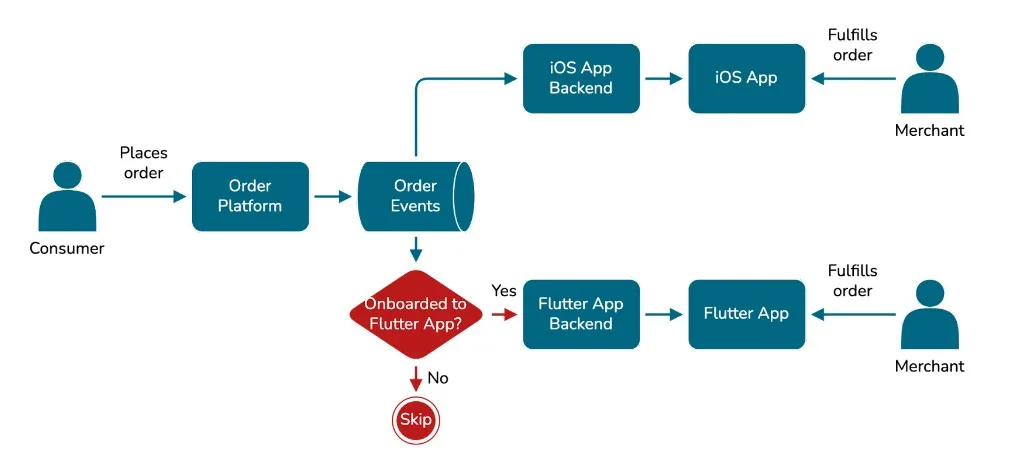

Step 1. Make Order Data Available on the Flutter App

If an existing merchant using Wolt on an iPad simply downloaded the Flutter app and logged in, they would be surprised to find that their orders did not appear there and could only be seen on their iPad. This is because the Flutter app's backend filtered out Kafka events for orders in venues that weren't explicitly onboarded to the new app.

To make this transition happen, we first needed to ensure that no matter which app a merchant used, they would consistently see the same information about their orders. Thus, we needed to remove the filtering of order events. Technically, this meant that our backend would have to process 100% of all events coming from the Order Platform, in contrast to just 10% before, a tenfold jump.

To achieve this, we refactored how we control the event filtering, replacing a binary environment variable flag with a percentage-based one, controlled with Live Config, our in-house solution for changing service configuration values at runtime. We then began to dial down the filter, gradually increasing the processing of the filtered 90% of events, while carefully monitoring the load on our system and database. That was relatively straightforward, and we learned that both the service and the database were able to handle all the events just fine. We dialed the filter fully down on Thursday, prepared dashboards and a runbook for an on-call engineer to execute in case of problems, and kept it running until Monday. The goal was to gather more data over the weekend, when we usually experience our peak order times.
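A percentage-based filter of this kind can be sketched as follows. This is a minimal illustration, not our actual implementation: the function names are hypothetical, the Live Config value is represented by a plain integer argument, and bucketing by venue ID (rather than per event) is an assumption made so that a given venue is either fully filtered or fully processed.

```python
import hashlib
from typing import AbstractSet


def rollout_bucket(venue_id: str) -> int:
    """Map a venue ID to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(venue_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def should_process(
    venue_id: str,
    onboarded: AbstractSet[str],
    unfiltered_percent: int,
) -> bool:
    """Decide whether an order event for this venue passes the filter.

    Venues onboarded to the new app always pass. Every other venue
    passes once its bucket falls below the configured percentage
    (hypothetically read from runtime configuration by the caller).
    """
    if venue_id in onboarded:
        return True
    return rollout_bucket(venue_id) < unfiltered_percent
```

Because the bucket is derived from a hash of the venue ID, raising the percentage only ever adds venues to the processed set, so a venue does not flip back and forth while the dial moves in one direction.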

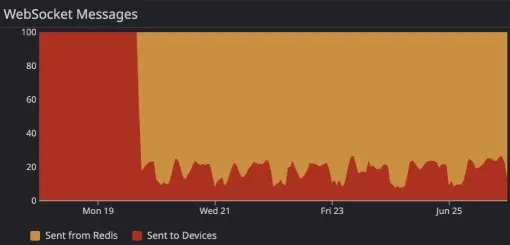

On Monday, we started receiving complaints from merchants using the Flutter app, who experienced slowness at a time that correlated with our dialing down of the order event filtering. We turned the filter back on and started investigating. The app relies extensively on WebSockets, which directly determine how fast data changes appear in the app, and we learned that we had no means to monitor their behavior in depth. So we invested in building comprehensive observability around WebSockets, including tracking the number of active connections, max connections per pod, and message delivery time lag. Once we had all the dashboards in place, we turned the filter off briefly and verified our assumption that the WebSockets were indeed causing a degraded experience on the app. The evidence was clear: the message delivery time lag rose from 30 ms to 1.5 minutes.
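To make those three signals concrete, here is a small in-memory sketch of what a per-pod tracker could look like. The class and method names are hypothetical, and a real setup would export these values to a metrics backend rather than keep them in a list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class WebSocketStats:
    """Per-pod counters for WebSocket health (hypothetical names)."""

    active_connections: int = 0
    max_connections: int = 0
    lags_ms: List[int] = field(default_factory=list)

    def on_connect(self) -> None:
        self.active_connections += 1
        # Track the highest number of simultaneous connections seen.
        self.max_connections = max(self.max_connections, self.active_connections)

    def on_disconnect(self) -> None:
        self.active_connections = max(0, self.active_connections - 1)

    def on_delivered(self, published_at_ms: int, delivered_at_ms: int) -> None:
        # Lag is the time between an event being published and the
        # resulting message being written to the device's socket.
        self.lags_ms.append(delivered_at_ms - published_at_ms)

    def worst_lag_ms(self) -> int:
        return max(self.lags_ms, default=0)
```

With a tracker like this, a jump from a 30 ms lag to a 90,000 ms lag (1.5 minutes) shows up directly in `worst_lag_ms()`.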

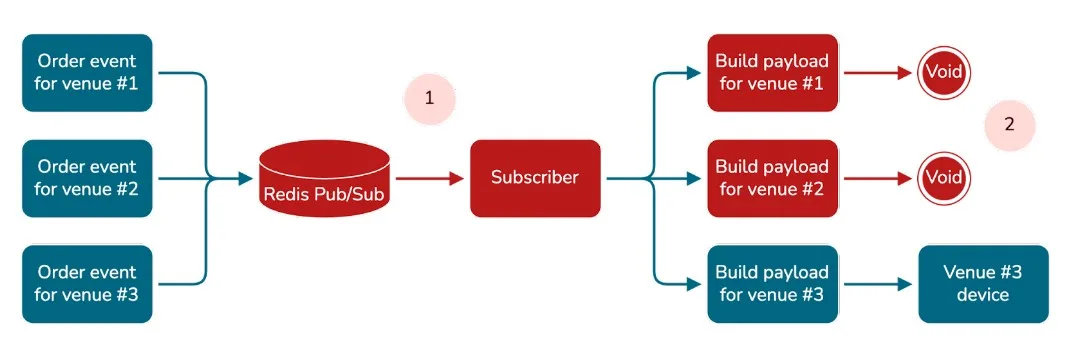

As we delved deeper into the WebSockets implementation, we identified two major bottlenecks:

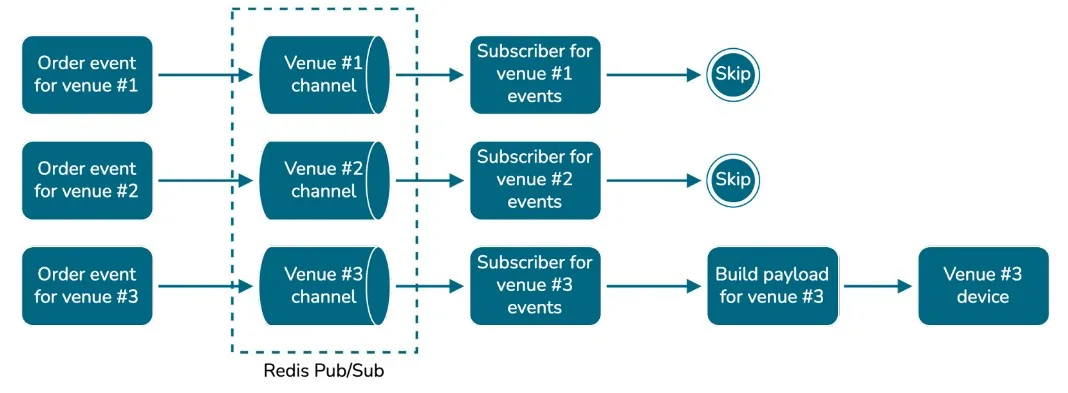

1. We used only one Pub/Sub channel in Redis, and only one subscriber instance listened to changes and processed them on the other side.

2. We ran heavy database operations to construct WebSocket message payloads for all events, regardless of whether we had a recipient for them or not.

We implemented multi-channeling in our Pub/Sub workflow, ensuring that events for the same venue end up in the same channel, and spawned 16 channels along with corresponding subscriber instances. We also began checking whether a message had any recipients before running the expensive queries to build its payload.
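Both changes can be sketched in a few lines. This is an illustration under assumptions, not our production code: the channel naming scheme, the CRC32 hash, and the `build_payload` and `send` callables are all hypothetical stand-ins.

```python
import zlib
from typing import Callable, Dict, Sequence

NUM_CHANNELS = 16


def channel_for(venue_id: str, num_channels: int = NUM_CHANNELS) -> str:
    """Pick a Pub/Sub channel so that one venue always maps to one channel."""
    shard = zlib.crc32(venue_id.encode("utf-8")) % num_channels
    return f"order-events:{shard}"  # hypothetical channel naming


def handle_event(
    venue_id: str,
    connections_by_venue: Dict[str, Sequence[str]],
    build_payload: Callable[[str], dict],
    send: Callable[[str, dict], None],
) -> int:
    """Deliver an event to connected devices; return how many received it."""
    recipients = connections_by_venue.get(venue_id, ())
    if not recipients:
        # Nobody is listening for this venue, so skip the expensive
        # database queries needed to build the payload.
        return 0
    payload = build_payload(venue_id)
    for connection_id in recipients:
        send(connection_id, payload)
    return len(recipients)
```

Keeping a venue pinned to one channel preserves the relative order of that venue's events, since a single subscriber processes them, while different venues are spread across subscribers.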

We added additional monitoring to count the messages we received from Redis and compared them to the number actually delivered to devices. From this we learned that we didn't have to run expensive payload construction for 70% of all events.

With these scaling and optimization measures, we were able to successfully turn off the order event filtering and ultimately make order data available to any merchant willing to use the Flutter app instead of the old app.

Step 2. Ensure Data Consistency in Order Event Processing

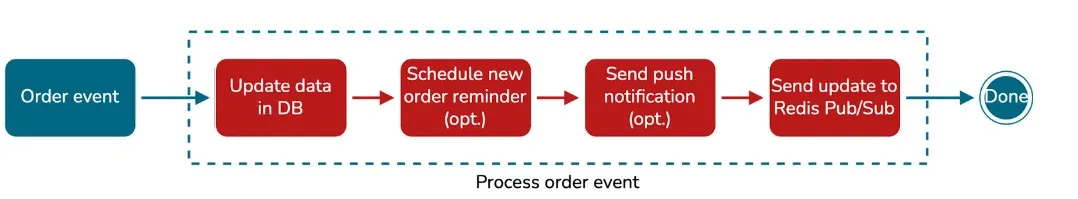

As the Flutter app backend processes incoming order events, it performs a number of operations, such as updating a local data representation in the service's PostgreSQL database, sending updates to Redis Pub/Sub, sending push notifications, and scheduling reminders for merchants to acknowledge the new order. Historically, all these operations were executed sequentially, and most of the time, things worked perfectly.

In a distributed systems environment, when a service interacts with multiple downstream systems during the request lifecycle, there's a good chance that something will go wrong after a specific interaction. When this happens, an error handling strategy has to be in place. At times, simply ignoring a failure may be alright. At other times, we may want to roll back the previously made changes in a reliable manner.

While we could live with the occasional failures when serving a small percentage of merchants, we couldn't take the same risk if we were to serve them all. Therefore, we invested in the reliability of our order processing workflows. Given the asynchronous nature of our system, which is largely based on events, we decided to make the order processing workflow event-based as well.

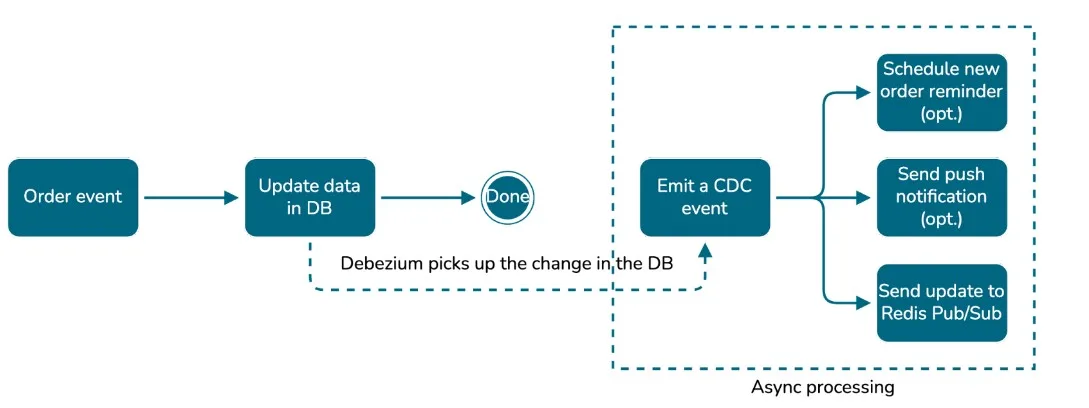

Once an order event arrived, we would only store it in the database. The database would then emit a change data capture (CDC) event for that operation, prompting multiple subscribers to proceed with their part of the job. If a subscriber encountered a failure for some reason, the change would not be marked as processed in Kafka, so the subscriber would retry it.
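The retry behavior can be illustrated with a small in-memory model. This is a sketch under assumptions: the `ChangeLog` class is a hypothetical stand-in for the CDC topic, and per-subscriber offsets play the role of "marked as processed".

```python
from typing import Callable, Dict, List


class ChangeLog:
    """In-memory stand-in for a CDC topic: an append-only list of changes."""

    def __init__(self) -> None:
        self.events: List[dict] = []
        self.offsets: Dict[str, int] = {}  # next unprocessed index per subscriber

    def append(self, event: dict) -> None:
        self.events.append(event)

    def poll(self, subscriber: str, handler: Callable[[dict], None]) -> int:
        """Deliver pending changes to one subscriber; return how many succeeded.

        The offset advances only after the handler returns, so a change
        whose handler raises is delivered again on the next poll.
        """
        processed = 0
        offset = self.offsets.get(subscriber, 0)
        while offset < len(self.events):
            try:
                handler(self.events[offset])
            except Exception:
                break  # leave the offset untouched so the change is retried
            offset += 1
            self.offsets[subscriber] = offset
            processed += 1
        return processed
```

Each subscriber (for example push notifications or Redis Pub/Sub updates) keeps its own offset, so a failure in one does not block the others. Because a change can be delivered more than once, handlers in a design like this need to be idempotent.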

These architectural improvements enabled us to update all the systems involved in the order event processing workflow reliably. This ensured the atomicity of the operation and guaranteed data consistency.

Step 3. Scale the Backend to Serve 100% of Merchants

Upon examining the current traffic on the Flutter app backend, we discovered that it only handled 3% of the overall expected traffic. With all order data now available for every venue, our next goal was to ensure that the backend would be fully prepared to serve all merchants as they transitioned to using the new Flutter app.

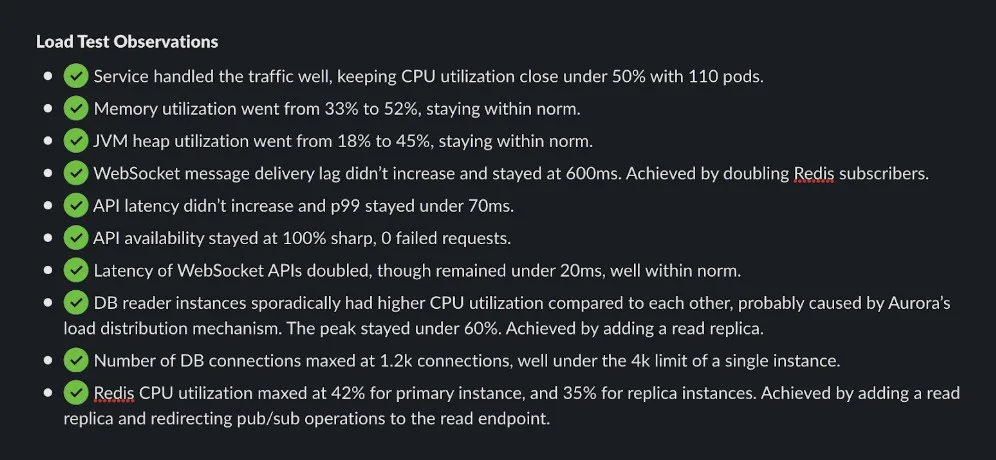

At Wolt, we have a load testing framework built on top of Gatling, designed to simulate realistic traffic and authentication. To prepare our service, we constructed a load test suite targeting the top 5 APIs, excluding those that either made downstream service calls or altered data. In collaboration with our Performance team at Wolt, we added new capabilities to the load testing framework, such as simulating load on WebSockets, and fine-tuned our service.

We ran the tests in production, and our first three load testing attempts failed:

1. In the first attempt, we learned that our internal ingress endpoint, which we used to run the load test, didn't support WebSockets, so we had to upgrade it. Additionally, we observed that we ramped up traffic too quickly, causing the service to struggle with scaling the fleet.

2. In the second attempt, we learned that the load testing fleet wasn't scaled sufficiently to simulate the traffic volume we needed. Additionally, we discovered that we had inaccurate metrics in Datadog, as we hadn't uniquely tagged them before sending, which caused unexpected data overrides. As a result, the current dashboard was unreliable, and we did not feel comfortable load testing the service with those discrepancies.

3. In the third attempt, we discovered the actual service bottlenecks. To address them, we added read replicas to our database and Redis, and increased the number of Pub/Sub channels from 16 to 32.

The fourth attempt was finally successful, concluding the 10-day period of our load testing efforts.
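The metric override from the second attempt is easy to reproduce in a simplified model. The sketch below is not how Datadog works internally; it only assumes that a gauge series is identified by its name plus its tag set and that the last write wins. The metric name, tag keys, and helper functions are hypothetical.

```python
from typing import Dict, Tuple

SeriesKey = Tuple[str, Tuple[Tuple[str, str], ...]]


def series_key(name: str, tags: Dict[str, str]) -> SeriesKey:
    """Identify a series by metric name plus its sorted tag set."""
    return (name, tuple(sorted(tags.items())))


def record_gauge(
    store: Dict[SeriesKey, float], name: str, value: float, tags: Dict[str, str]
) -> None:
    """Store a gauge value; a later write to the same series replaces it."""
    store[series_key(name, tags)] = value


# Two pods report without a distinguishing tag: one series, last write wins.
untagged: Dict[SeriesKey, float] = {}
record_gauge(untagged, "ws.active_connections", 120, {"service": "merchant"})
record_gauge(untagged, "ws.active_connections", 80, {"service": "merchant"})

# Adding a per-pod tag keeps the two series separate.
tagged: Dict[SeriesKey, float] = {}
record_gauge(tagged, "ws.active_connections", 120, {"service": "merchant", "pod": "a"})
record_gauge(tagged, "ws.active_connections", 80, {"service": "merchant", "pod": "b"})
```

In this model the untagged store ends up with a single series whose value is 80, while the tagged store holds two series that sum to the true total of 200.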

What's Next?

Ensuring the availability and consistency of order data, as well as the scalability and performance of the service, were crucial as we executed our vision to provide a more efficient and accessible app alternative for merchants to fulfill orders on Wolt.

But this journey is not over yet. We're constantly in touch with our merchants about their user experience and wishes, and we know that they love the old iOS app, as it offers quite a few unique experiences that they don't want to part with. Now, we're laser-focused on incorporating those into the new Flutter app.

The merchant app is one of the three top apps at Wolt, along with the consumer and courier partner apps. Does the vision of leveling up the experience that merchants have used since the very early days of Wolt, with a more feature-rich, cross-platform, cross-form-factor alternative, resonate with you? If yes, we're hiring! Check out our open roles on Wolt Careers - jump on board, we saved you a seat.